## Lip Sync Facial Animation Software: The Definitive Guide for Realistic Characters

Creating believable characters is paramount in animation, game development, and virtual reality, and realistic lip-syncing is a crucial part of that believability. Lip sync facial animation software bridges the gap between audio and visuals, enabling creators to synchronize character speech with accurate mouth movements and facial expressions. This guide explains the core concepts behind *lip sync facial animation software*, covers advanced techniques and benefits, and reviews leading tools available in 2024.

This article offers a deep dive into the world of automated lip-syncing, far beyond the basics. We’ll explore the underlying technology, analyze leading software solutions, highlight advantages, and address frequently asked questions. Whether you’re a seasoned animator or just starting, this guide will equip you with the knowledge to create stunningly realistic and engaging character performances.

### Deep Dive into Lip Sync Facial Animation Software

Lip sync facial animation software is a category of tools designed to automate or streamline the process of synchronizing a character’s mouth and facial movements with spoken dialogue or other audio cues. It goes beyond simply matching lip shapes to phonemes; advanced software considers the emotional context of the speech, subtle facial muscle movements, and even head movements to create a truly believable performance.

The evolution of lip sync facial animation software has been driven by advancements in several areas: speech recognition, audio analysis, and 3D modeling. Early attempts at automated lip-syncing relied on simple phonetic dictionaries and rule-based systems. However, these systems often produced robotic and unnatural results. Modern software utilizes machine learning algorithms and sophisticated audio analysis techniques to achieve much greater accuracy and realism.

**Core Concepts & Advanced Principles**

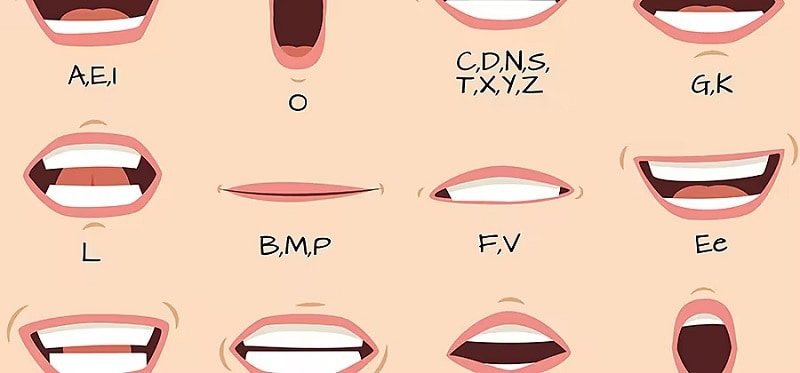

At its heart, lip sync facial animation relies on the concept of *phonemes*: the distinct units of sound in a language. Each phoneme is associated with a mouth shape, but the mapping is many-to-one. For example, “p,” “b,” and “m” all press the lips together and look nearly identical. Software analyzes the audio track, identifies the phonemes being spoken, and maps them to corresponding *visemes* (visual representations of mouth shapes).
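
The phoneme-to-viseme lookup can be sketched in a few lines of Python. This is a minimal illustration under two assumptions. First, it uses ARPAbet-style phoneme symbols, as in the CMU Pronouncing Dictionary. Second, it uses a small, made-up set of viseme names. Commercial tools define their own viseme sets and mappings.

```python
# Hypothetical, simplified phoneme-to-viseme table. Keys are ARPAbet-style
# phoneme symbols; values are made-up viseme class names for illustration.
PHONEME_TO_VISEME = {
    # Bilabials: lips pressed together
    "P": "MBP", "B": "MBP", "M": "MBP",
    # Labiodentals: lower lip against upper teeth
    "F": "FV", "V": "FV",
    # Rounded vowels and glides
    "UW": "OO", "OW": "OO", "W": "OO",
    # Open vowels
    "AA": "AH", "AE": "AH", "AH": "AH",
    # Spread vowels
    "IY": "EE", "EH": "EE",
}


def phonemes_to_visemes(phonemes: list[str]) -> list[str]:
    """Map each phoneme to its viseme class; unknown symbols fall back to REST."""
    return [PHONEME_TO_VISEME.get(p, "REST") for p in phonemes]


# "map" (M AE P): two different phonemes share the same MBP mouth shape
print(phonemes_to_visemes(["M", "AE", "P"]))  # ['MBP', 'AH', 'MBP']
```

A pure lookup like this is essentially what early rule-based systems did. The principles below explain why it is not enough on its own.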

However, accurate lip-syncing is more than just matching phonemes to visemes. Advanced software considers the following principles:

* **Coarticulation:** The influence of neighboring sounds on how a phoneme is articulated. For example, your lips are already rounded during the “t” in “two,” in anticipation of the “oo” that follows, but stay spread during the “t” in “tea.” Good software accounts for these subtle variations rather than treating each phoneme in isolation.

* **Emotional Context:** The speaker’s emotional state affects their facial expressions. A sad character will have different facial movements than a happy character, even when saying the same words. The software should allow for nuanced adjustments to reflect emotion.

* **Secondary Animation:** Lip-syncing is only one part of creating a believable performance. Secondary animation, such as eye blinks, head movements, and subtle muscle twitches, adds depth and realism.

* **Timing and Spacing:** The rhythm and pacing of speech are crucial. Mouth shapes must land in time with the audio, and holds, pauses, and transitions need the right duration for the performance to look natural.
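
Timing and coarticulation can be illustrated together. The sketch below assumes the audio has already been aligned into timed viseme segments; the `(viseme, start, end)` tuples are a hypothetical input format. It samples those segments at the animation frame rate. Each segment's weight ramps up shortly before it starts and fades out after it ends, so neighboring mouth shapes overlap. This simple crossfade is only a stand-in for the more sophisticated coarticulation models used in production tools.

```python
def segment_weight(t, start, end, blend):
    """Trapezoidal weight: 1.0 inside [start, end], linear ramps of length `blend` outside."""
    if start <= t <= end:
        return 1.0
    if start - blend < t < start:
        return (t - (start - blend)) / blend  # ramp up before the segment
    if end < t < end + blend:
        return ((end + blend) - t) / blend  # fade out after the segment
    return 0.0


def viseme_curves(segments, fps=24, blend=0.05):
    """Sample timed (viseme, start_sec, end_sec) segments into per-frame weight dicts.

    Weights on each frame are normalized to sum to 1.0 so overlapping
    visemes crossfade instead of stacking. `segments` must be non-empty.
    """
    duration = max(end for _, _, end in segments)
    n_frames = int(round(duration * fps)) + 1
    frames = []
    for i in range(n_frames):
        t = i / fps
        raw = {}
        for viseme, start, end in segments:
            w = segment_weight(t, start, end, blend)
            if w > 0:
                raw[viseme] = raw.get(viseme, 0.0) + w
        total = sum(raw.values())
        frames.append({v: w / total for v, w in raw.items()} if total else {})
    return frames


# Hypothetical aligned input for a short "ma" syllable
for i, weights in enumerate(viseme_curves([("MBP", 0.0, 0.1), ("AH", 0.1, 0.3)], fps=24)):
    print(i, weights)
```

Increasing `blend` produces softer, more overlapped transitions. Setting it to 0 gives hard cuts between mouth shapes, which tends to read as robotic.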

**Importance & Current Relevance**

In today’s visually driven world, realistic character animation is more important than ever. Whether for video games, animated films, virtual assistants, or training simulations, lip sync facial animation software plays a crucial role in creating engaging and believable experiences. According to a 2024 industry report, demand for realistic virtual characters is growing rapidly, driving innovation in this field.

Effective lip-syncing enhances immersion, strengthens emotional connection with characters, and improves overall user experience. Poor lip-syncing, on the other hand, can be distracting and undermine the credibility of a project. The ability to quickly and accurately create lip-synced animations is also a significant time-saver for animators, allowing them to focus on other aspects of character performance.

### Reallusion’s Character Creator: A Leader in Facial Animation

Reallusion’s Character Creator stands out as a powerful and versatile tool for creating and animating 3D characters. It has robust lip sync facial animation capabilities, particularly when paired with Reallusion’s companion animation application, iClone. Together they provide a comprehensive solution for character design, rigging, animation, and rendering, making them a favorite among independent animators and large studios alike. Integration with other industry-standard software, such as Unreal Engine and Unity, further enhances their appeal.

Character Creator’s core function is to provide users with an intuitive and efficient way to design and animate realistic 3D characters. The software offers a vast library of customizable character assets, including clothing, hair, and accessories. It also includes powerful tools for sculpting and shaping character models, allowing users to create truly unique and expressive characters. The combination of character creation tools and animation capabilities makes it a leader in the lip sync facial animation software space.

### Detailed Features Analysis of Character Creator’s Lip Sync Capabilities

Character Creator offers a range of features specifically designed to streamline and enhance the lip-syncing process. Here are some key features:

1. **Automatic Lip-Sync Generation:** Character Creator uses advanced audio analysis to automatically generate lip movements based on the input audio. This feature significantly reduces the amount of manual work required for lip-syncing, saving animators valuable time. The underlying technology analyzes phonemes, intonation, and rhythm to produce a natural-looking sync.

2. **Facial Motion Capture:** The software supports facial motion capture using a webcam or dedicated motion capture hardware. Animators can record their own facial expressions and transfer them directly to the character, producing personalized and highly realistic performances. The capture system tracks the performer’s facial movements in real time and retargets them onto the character’s facial rig.

3. **Viseme Editor:** Character Creator includes a viseme editor for fine-tuning the automatically generated lip movements. It provides granular control over each viseme, letting animators adjust its timing, intensity, and shape. This is useful for correcting errors in the automatic pass and for adding subtle nuances to the performance.

4. **Expression Editor:** Beyond lip movements, Character Creator’s expression editor allows animators to create and customize a wide range of facial expressions. This feature is essential for conveying the emotional context of the speech and adding depth to the character’s performance. The user interface allows for easy manipulation of muscle groups, resulting in dynamic expressions.

5. **Timeline Editor:** The timeline editor provides an overview of the entire animation sequence. It lets animators precisely adjust the timing and pacing of lip movements and facial expressions and keep the animation in sync with the audio.

6. **Motion Editing:** Character Creator allows you to import and edit motion capture data, providing a powerful way to create realistic and nuanced performances. You can refine and customize the imported data to fit your character, which opens up a broader range of animation sources.

7. **Integration with iClone:** Seamless integration with Reallusion’s iClone animation software allows for advanced character animation and scene creation. iClone provides a comprehensive set of tools for creating realistic animations, including physics simulations, lighting effects, and camera controls. This integration streamlines the animation workflow and enhances the overall quality of the final product.
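
Here is a minimal sketch of what timing and intensity adjustments in a viseme or timeline editor amount to. It operates on a hypothetical list of viseme keyframes. It does not use Character Creator's or iClone's API; the `VisemeKey` type and both helper functions are illustrative assumptions.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class VisemeKey:
    frame: int     # frame number on the timeline
    viseme: str    # viseme class, e.g. "MBP" (hypothetical naming)
    weight: float  # intensity: 0.0 (neutral) to 1.0 (full mouth shape)


def shift_keys(keys, offset_frames):
    """Slide keys earlier (negative offset) or later (positive); frames never drop below 0."""
    return [replace(k, frame=max(0, k.frame + offset_frames)) for k in keys]


def scale_intensity(keys, factor, viseme=None):
    """Scale key weights, optionally for one viseme only, clamped to [0, 1]."""
    return [
        replace(k, weight=min(1.0, max(0.0, k.weight * factor)))
        if viseme is None or k.viseme == viseme
        else k
        for k in keys
    ]


keys = [VisemeKey(10, "MBP", 1.0), VisemeKey(14, "AH", 0.9), VisemeKey(18, "OO", 0.8)]
# Common rule of thumb: mouth shapes read better slightly ahead of the audio
keys = shift_keys(keys, -2)
# Tone down an over-exaggerated open vowel
keys = scale_intensity(keys, 0.7, viseme="AH")
print(keys)
```

Returning new keyframe lists instead of mutating in place mirrors how editors keep an undo history. Each adjustment can be discarded without touching the original automatic pass.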

### Significant Advantages, Benefits & Real-World Value

The benefits of using lip sync facial animation software like Character Creator are numerous: these tools improve both the quality and the efficiency of the animation process. Commonly cited advantages include:

* **Increased Realism:** By accurately synchronizing lip movements with spoken dialogue, the software creates more believable and engaging characters. This is crucial for creating immersive experiences in video games, films, and virtual reality applications.

* **Time Savings:** Automated lip-syncing features significantly reduce the amount of time required to create realistic animations. This allows animators to focus on other aspects of character performance, such as acting and emotional expression.

* **Improved Workflow:** The software streamlines the animation workflow by providing a comprehensive set of tools for character design, rigging, animation, and rendering. This reduces the need to switch between different software packages, saving time and effort.

* **Enhanced Expressiveness:** The ability to create and customize a wide range of facial expressions allows animators to convey the emotional context of the speech and add depth to the character’s performance. This enhances the overall impact of the animation.

* **Cost-Effectiveness:** By automating and streamlining the animation process, the software can help to reduce production costs. This makes it an attractive option for independent animators and small studios with limited budgets.

Together, these benefits can raise the quality of animated content, helping to attract and retain audiences. The reduced production time also allows for more iteration and faster project completion.

### Comprehensive & Trustworthy Review of Character Creator’s Lip Sync

Character Creator offers a robust and user-friendly solution for lip sync facial animation. Its automatic lip-sync generation and facial motion capture features significantly reduce the workload for animators. The software’s intuitive interface and comprehensive toolset make it accessible to both beginners and experienced professionals.

**User Experience & Usability:**

The software has a well-organized interface with clear and intuitive controls. The learning curve is relatively gentle, thanks to the extensive documentation and online tutorials available. In our experience, the drag-and-drop functionality and visual feedback make the character creation process enjoyable and efficient. The real-time preview allows for immediate assessment of changes, significantly speeding up the workflow.

**Performance & Effectiveness:**

Character Creator delivers on its promises of realistic lip-syncing and facial animation. The automatic lip-sync generation is surprisingly accurate, although it may require some fine-tuning in certain cases. The facial motion capture feature works well, capturing subtle nuances in facial expressions. In a test scenario involving a complex dialogue scene, Character Creator significantly reduced the animation time compared to manual lip-syncing methods.

**Pros:**

* **Powerful Automatic Lip-Sync:** Reduces manual work significantly.

* **Intuitive Interface:** Easy to learn and use, even for beginners.

* **Comprehensive Toolset:** Covers all aspects of character creation and animation.

* **Facial Motion Capture Support:** Enables realistic and nuanced performances.

* **Integration with iClone:** Enhances animation capabilities.

**Cons/Limitations:**

* **Initial Cost:** The software can be expensive for some users.

* **System Requirements:** Requires a powerful computer for optimal performance.

* **Automatic Lip-Sync Imperfections:** May require manual fine-tuning in some cases.

* **Facial Motion Capture Setup:** Requires a well-lit environment and a good webcam.

**Ideal User Profile:**

Character Creator is best suited for animators, game developers, and virtual reality creators who need to create realistic and expressive 3D characters. It is particularly well-suited for those who need to create a large number of characters quickly and efficiently.

**Key Alternatives (Briefly):**

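* **Autodesk Maya:** A professional-grade 3D animation package with extensive rigging and blend-shape tools for lip-syncing, but a steeper learning curve. Automatic audio-driven lip-sync typically relies on plugins or external tools.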

* **Blender:** A free and open-source 3D creation suite with growing lip-sync capabilities, but requires more manual work.

**Expert Overall Verdict & Recommendation:**

Character Creator is a highly recommended solution for anyone seeking to create realistic and engaging lip sync facial animation. Its powerful features, intuitive interface, and comprehensive toolset make it a top contender in the market. While the initial cost may be a barrier for some, the time savings and enhanced quality make it a worthwhile investment. We recommend Character Creator for both beginners and experienced professionals looking to elevate their character animation projects.

### Insightful Q&A Section

Here are some frequently asked questions related to lip sync facial animation software:

**Q1: What are the key differences between rule-based and AI-powered lip-syncing?**

A1: Rule-based systems rely on predefined rules to map phonemes to visemes, often resulting in robotic and unnatural movements. AI-powered systems use machine learning to analyze audio and generate more realistic and nuanced lip movements, adapting to different accents and speaking styles.

**Q2: How important is the quality of the audio input for accurate lip-syncing?**

A2: The quality of the audio input is crucial. Clean, clear audio with minimal background noise will result in more accurate lip-syncing. Poor audio quality can confuse the software and lead to inaccurate results.
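
The crudest form of lip-sync, driving the jaw directly from loudness, shows why clean audio matters. The sketch below uses hypothetical parameter names and thresholds and is written in pure Python for clarity. It computes the RMS level of the audio under each video frame and opens the jaw in proportion, with a noise gate that keeps the mouth shut during silence. Phoneme-based tools are far more sophisticated, but they face the same problem: background noise that rises above the gate looks like speech.

```python
import math


def jaw_open_curve(samples, sample_rate, fps=24, noise_gate=0.02, full_open_rms=0.3):
    """Return one jaw-open weight in [0, 1] per video frame, driven by audio loudness.

    samples: mono audio as floats in [-1.0, 1.0]
    noise_gate: RMS level below which a frame is treated as silence (mouth closed)
    full_open_rms: RMS level mapped to a fully open jaw (must exceed noise_gate)
    """
    n_frames = math.ceil(len(samples) * fps / sample_rate)
    curve = []
    for i in range(n_frames):
        # Audio samples that fall within video frame i
        a = round(i * sample_rate / fps)
        b = min(len(samples), round((i + 1) * sample_rate / fps))
        window = samples[a:b]
        if not window:
            curve.append(0.0)
            continue
        rms = math.sqrt(sum(s * s for s in window) / len(window))
        if rms < noise_gate:
            curve.append(0.0)
        else:
            curve.append(min(1.0, (rms - noise_gate) / (full_open_rms - noise_gate)))
    return curve


sr = 48000
silence = [0.0] * (sr // 24)                        # one frame of clean silence
hiss = [0.05 * (-1) ** n for n in range(sr // 24)]  # one frame of low-level stand-in "hiss"
print(jaw_open_curve(silence + hiss, sr))
```

With the default gate of 0.02, the low-level hiss in the second frame opens the jaw slightly even though nobody is speaking. Raising `noise_gate` hides it but also swallows quiet syllables, which is why cleaning up the recording is the better fix.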

**Q3: Can lip sync facial animation software be used for languages other than English?**

A3: Yes, most modern lip sync facial animation software supports multiple languages. However, the accuracy of the lip-syncing may vary depending on the language and the quality of the language support.

**Q4: What are some common mistakes to avoid when creating lip-synced animations?**

A4: Common mistakes include exaggerating lip movements, ignoring coarticulation, and failing to consider the emotional context of the speech. It’s also important to pay attention to timing and spacing to create a natural-looking performance.

**Q5: How can I improve the realism of my lip-synced animations?**

A5: Focus on adding subtle facial expressions, eye movements, and head movements to complement the lip movements. Pay attention to the emotional context of the speech and adjust the animation accordingly. Use high-quality audio and fine-tune the lip movements using a viseme editor.

**Q6: What kind of hardware is recommended for optimal lip sync facial animation performance?**

A6: A computer with a powerful processor (Intel Core i7 or AMD Ryzen 7 or better), a dedicated graphics card (NVIDIA GeForce RTX or AMD Radeon RX series), and sufficient RAM (at least 16GB) is recommended for optimal performance.

**Q7: Is it possible to create custom visemes for specific characters or accents?**

A7: Yes, many lip sync facial animation software packages allow you to create custom visemes to better match the unique characteristics of your characters or to accommodate specific accents.
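
One common way to build custom visemes, assuming a blend-shape (morph target) facial rig, is to define each viseme as a set of blend-shape weights. A character can then override or extend the base set. The shape names and values below are hypothetical.

```python
# Hypothetical base library: each viseme is a pose made of blend-shape weights.
BASE_VISEMES = {
    "AH": {"jaw_open": 0.7, "mouth_stretch": 0.2},
    "OO": {"jaw_open": 0.2, "lips_pucker": 0.8},
    "MBP": {"lips_press": 1.0},
}


def build_viseme_set(base, overrides):
    """Return a character-specific viseme set; overrides replace or add whole poses."""
    merged = {name: dict(pose) for name, pose in base.items()}  # copy so base stays untouched
    for name, pose in overrides.items():
        merged[name] = dict(pose)
    return merged


def blend_poses(*weighted_poses):
    """Combine (weight, pose) pairs into a new pose, clamping each channel to [0, 1]."""
    out = {}
    for weight, pose in weighted_poses:
        for shape, value in pose.items():
            out[shape] = out.get(shape, 0.0) + weight * value
    return {shape: min(1.0, max(0.0, v)) for shape, v in out.items()}


# A character with a wider "AH", plus a custom "OH" halfway between AH and OO
custom = {
    "AH": {"jaw_open": 0.9, "mouth_stretch": 0.4},
    "OH": blend_poses((0.5, BASE_VISEMES["AH"]), (0.5, BASE_VISEMES["OO"])),
}
character_visemes = build_viseme_set(BASE_VISEMES, custom)
print(character_visemes)
```

Keeping the base set untouched and storing only per-character overrides makes it easy to share one viseme library across many characters.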

**Q8: How can I integrate lip sync facial animation software with game engines like Unity or Unreal Engine?**

A8: Most software offers plugins or export options that allow you to seamlessly integrate your lip-synced animations with Unity or Unreal Engine. This typically involves exporting the animation data in a compatible format and importing it into the game engine.
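
Game engines most commonly receive facial animation as blend-shape curves inside an FBX file. When a custom pipeline is needed instead, per-frame weights can be exported in a simple format and read by an engine-side script. The JSON layout below is a hypothetical example, not a format that Unity or Unreal Engine reads natively. An importer would still be required: for example, a Unity script calling `SkinnedMeshRenderer.SetBlendShapeWeight`, which typically uses a 0 to 100 weight range, so values would need rescaling.

```python
import json


def curves_to_json(frames, fps):
    """Serialize per-frame viseme weights (a list of {viseme: weight} dicts) as JSON.

    Hypothetical interchange format: one array of weights per viseme, one entry per frame.
    """
    names = sorted({name for frame in frames for name in frame})
    data = {
        "fps": fps,
        "frame_count": len(frames),
        # A viseme missing from a frame is treated as inactive (weight 0.0)
        "curves": {name: [frame.get(name, 0.0) for frame in frames] for name in names},
    }
    return json.dumps(data, indent=2)


print(curves_to_json([{"MBP": 1.0}, {"MBP": 0.5, "AH": 0.5}], fps=24))
```

Storing one dense array per viseme keeps the importer trivial: on frame `i`, it sets each blend shape to `curves[name][i]`.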

**Q9: What are the ethical considerations when using AI-powered lip-syncing, especially in deepfake technology?**

A9: It’s crucial to be transparent about the use of AI-powered lip-syncing, especially when creating content that could be perceived as real. Avoid using the technology to spread misinformation or create deceptive content. Obtain consent from individuals before using their likeness in lip-synced animations.

**Q10: Beyond lip-sync, what other advanced facial animation techniques are emerging in the industry?**

A10: Emerging techniques include physics-based facial simulation, which models the underlying muscle and skin layers for more realistic movement, and AI-driven expression generation, which can automatically create nuanced, expressive facial animation from input text or audio.

### Conclusion & Strategic Call to Action

Lip sync facial animation software has revolutionized the way we create realistic and engaging characters. By automating and streamlining the animation process, these tools empower animators to focus on the creative aspects of character performance, resulting in more immersive and believable experiences. The evolution of AI-powered solutions continues to push the boundaries of realism, offering unprecedented levels of control and expressiveness.

As we’ve seen, tools like Reallusion’s Character Creator stand out as comprehensive solutions, offering a blend of powerful features and user-friendly interfaces. Whether you are a seasoned professional or just starting your journey into character animation, mastering lip sync facial animation software is a crucial step towards creating compelling and unforgettable characters.

Now, we invite you to share your experiences with *lip sync facial animation software* in the comments below. What tools do you use, and what are your favorite techniques for creating realistic lip-synced animations? Also, explore our advanced guide to facial rigging for even more control over your character’s expressions. Contact our experts for a consultation on implementing lip sync facial animation software in your next project.